Advanced use cases

Special data formats

Several options are available when your data has to follow a specific format.

- Create a Datafaker expression
    - Can be used to create names, addresses, or anything else that can be found under here
- Create a regex

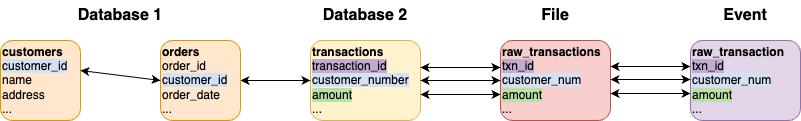
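When a column is generated from a regex, it can be useful to check that the values your application receives still follow that format. The following is a minimal sketch using only `java.util.regex`; the pattern `ACC[0-9]{8}` and the class `FormatCheckSketch` are hypothetical and are not part of Data Caterer.

```java
import java.util.List;
import java.util.regex.Pattern;

/** Checks that values follow the format a regex-generated column is expected to have. */
final class FormatCheckSketch {
    // Hypothetical format: "ACC" followed by exactly eight digits.
    static final Pattern ACCOUNT_ID = Pattern.compile("ACC[0-9]{8}");

    /** Returns true when the whole value matches the pattern. */
    static boolean matchesFormat(Pattern pattern, String value) {
        return value != null && pattern.matcher(value).matches();
    }

    /** Counts the values that do not match the pattern. */
    static long countInvalid(Pattern pattern, List<String> values) {
        return values.stream().filter(v -> !matchesFormat(pattern, v)).count();
    }

    public static void main(String[] args) {
        List<String> sample = List.of("ACC12345678", "ACC1234", "acc12345678");
        System.out.println("invalid values: " + countInvalid(ACCOUNT_ID, sample));
    }
}
```

`matches()` requires the whole value to match, so partial matches such as a correct prefix with too few digits are counted as invalid.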

Foreign keys across data sets

Details for how you can configure foreign keys can be found here.

Edge cases

For each given data type, there are edge cases which can cause issues when your application processes the data. This can be controlled at a column level by including the following flag in the generator options:

```java
field()
  .name("amount")
  .type(DoubleType.instance())
  .enableEdgeCases(true)
  .edgeCaseProbability(0.1)
```

```scala
field
  .name("amount")
  .`type`(DoubleType)
  .enableEdgeCases(true)
  .edgeCaseProbability(0.1)
```

```yaml
fields:
  - name: "amount"
    type: "double"
    generator:
      type: "random"
      options:
        enableEdgeCases: "true"
        edgeCaseProb: 0.1
```

For the full list of possible edge cases for each data type, check the documentation here.
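To make the effect of an edge case probability concrete, here is a conceptual sketch in plain Java. It is not Data Caterer's implementation: the class `EdgeCaseSketch`, the list of edge cases, and the value range are assumptions chosen for illustration. With a probability of 0.1, roughly one value in ten is drawn from the edge case list.

```java
import java.util.Random;

/** Conceptual sketch of mixing edge cases into generated doubles. */
final class EdgeCaseSketch {
    // Illustrative edge cases for a double column (assumed list, not taken from the library).
    static final double[] EDGE_CASES = {
        0.0, -0.0, Double.MAX_VALUE, -Double.MAX_VALUE, Double.MIN_VALUE,
        Double.POSITIVE_INFINITY, Double.NEGATIVE_INFINITY, Double.NaN
    };

    /** Returns an edge case with the given probability, otherwise a value in [0, 1000). */
    static double nextAmount(Random random, double edgeCaseProbability) {
        if (random.nextDouble() < edgeCaseProbability) {
            return EDGE_CASES[random.nextInt(EDGE_CASES.length)];
        }
        return random.nextDouble() * 1000.0;
    }

    /** Returns true when the value is one of the illustrative edge cases. */
    static boolean isEdgeCase(double value) {
        for (double edge : EDGE_CASES) {
            // Double.compare treats NaN as equal to NaN and distinguishes 0.0 from -0.0.
            if (Double.compare(edge, value) == 0) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        Random random = new Random(42L);
        int total = 10_000;
        int edgeCases = 0;
        for (int i = 0; i < total; i++) {
            if (isEdgeCase(nextAmount(random, 0.1))) {
                edgeCases++;
            }
        }
        System.out.printf("edge cases: %d of %d%n", edgeCases, total);
    }
}
```

Values such as `NaN` and the infinities are the kind of input that tends to break aggregations and comparisons, which is why a small but non-zero probability can be useful when testing.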

Scenario testing

You can create specific scenarios by adjusting the metadata found in the plan and tasks.

For example, suppose you have two data sources, a Postgres database and a Parquet file, and you want to save account data into Postgres and the transactions related to those accounts into the Parquet file. You can alter the status column in the account data to generate only open accounts, and define a foreign key between Postgres and Parquet to ensure the same account_id is used. Then, in the Parquet task, define 1 to 10 transactions to be generated per account_id.
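The shape of the data this scenario produces can be sketched in plain Java. This is only an illustration of the scenario, not Data Caterer code: the class `ScenarioSketch`, the `ACC%08d` id format, and the amount range are hypothetical. Every account has the status `open`, and each account_id is reused by between 1 and 10 transactions.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

/** Illustrates accounts with a fixed status and 1 to 10 transactions per account_id. */
final class ScenarioSketch {
    /** One row of account data. */
    static final class Account {
        final String accountId;
        final String status;

        Account(String accountId, String status) {
            this.accountId = accountId;
            this.status = status;
        }
    }

    /** One row of transaction data, linked to an account by accountId. */
    static final class Transaction {
        final String accountId;
        final double amount;

        Transaction(String accountId, double amount) {
            this.accountId = accountId;
            this.amount = amount;
        }
    }

    /** Generates accounts that are all in the "open" status. */
    static List<Account> generateAccounts(int count) {
        List<Account> accounts = new ArrayList<>();
        for (int i = 1; i <= count; i++) {
            accounts.add(new Account(String.format("ACC%08d", i), "open"));
        }
        return accounts;
    }

    /** Generates 1 to 10 transactions for each account, reusing its accountId. */
    static List<Transaction> generateTransactions(List<Account> accounts, Random random) {
        List<Transaction> transactions = new ArrayList<>();
        for (Account account : accounts) {
            int perAccount = 1 + random.nextInt(10); // 1 to 10 inclusive
            for (int i = 0; i < perAccount; i++) {
                transactions.add(new Transaction(account.accountId, random.nextDouble() * 100.0));
            }
        }
        return transactions;
    }

    public static void main(String[] args) {
        List<Account> accounts = generateAccounts(3);
        List<Transaction> transactions = generateTransactions(accounts, new Random(7L));
        System.out.println(accounts.size() + " accounts, " + transactions.size() + " transactions");
    }
}
```

Because transactions are created from the account list, every transaction refers to an existing account, which is the guarantee the foreign key provides in the scenario above.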

Postgres account generation example task

Parquet transaction generation example task

Plan

Cloud storage

Data source

To save CSV, JSON, Parquet, or ORC files into cloud storage, add the extra configuration that the storage provider requires. Below is an example for S3.

```java
var csvTask = csv("my_csv", "s3a://my-bucket/csv/accounts")
  .schema(
    field().name("account_id"),
    ...
  );

var s3Configuration = configuration()
  .runtimeConfig(Map.of(
    "spark.hadoop.fs.s3a.directory.marker.retention", "keep",
    "spark.hadoop.fs.s3a.bucket.all.committer.magic.enabled", "true",
    "spark.hadoop.fs.defaultFS", "s3a://my-bucket",
    // can change to other credential providers as shown here
    // https://hadoop.apache.org/docs/stable/hadoop-aws/tools/hadoop-aws/index.html#Changing_Authentication_Providers
    "spark.hadoop.fs.s3a.aws.credentials.provider", "org.apache.hadoop.fs.s3a.SimpleAWSCredentialsProvider",
    "spark.hadoop.fs.s3a.access.key", "access_key",
    "spark.hadoop.fs.s3a.secret.key", "secret_key"
  ));

execute(s3Configuration, csvTask);
```

```scala
val csvTask = csv("my_csv", "s3a://my-bucket/csv/accounts")
  .schema(
    field.name("account_id"),
    ...
  )

val s3Configuration = configuration
  .runtimeConfig(Map(
    "spark.hadoop.fs.s3a.directory.marker.retention" -> "keep",
    "spark.hadoop.fs.s3a.bucket.all.committer.magic.enabled" -> "true",
    "spark.hadoop.fs.defaultFS" -> "s3a://my-bucket",
    // can change to other credential providers as shown here
    // https://hadoop.apache.org/docs/stable/hadoop-aws/tools/hadoop-aws/index.html#Changing_Authentication_Providers
    "spark.hadoop.fs.s3a.aws.credentials.provider" -> "org.apache.hadoop.fs.s3a.SimpleAWSCredentialsProvider",
    "spark.hadoop.fs.s3a.access.key" -> "access_key",
    "spark.hadoop.fs.s3a.secret.key" -> "secret_key"
  ))

execute(s3Configuration, csvTask)
```

```hocon
folders {
  generatedPlanAndTaskFolderPath = "s3a://my-bucket/data-caterer/generated"
  planFilePath = "s3a://my-bucket/data-caterer/generated/plan/customer-create-plan.yaml"
  taskFolderPath = "s3a://my-bucket/data-caterer/generated/task"
}

runtime {
  config {
    ...
    #S3
    "spark.hadoop.fs.s3a.directory.marker.retention" = "keep"
    "spark.hadoop.fs.s3a.bucket.all.committer.magic.enabled" = "true"
    "spark.hadoop.fs.defaultFS" = "s3a://my-bucket"
    #can change to other credential providers as shown here
    #https://hadoop.apache.org/docs/stable/hadoop-aws/tools/hadoop-aws/index.html#Changing_Authentication_Providers
    "spark.hadoop.fs.s3a.aws.credentials.provider" = "org.apache.hadoop.fs.s3a.SimpleAWSCredentialsProvider"
    "spark.hadoop.fs.s3a.access.key" = "access_key"
    "spark.hadoop.fs.s3a.secret.key" = "secret_key"
  }
}
```
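The S3 examples above hard-code `access_key` and `secret_key` as placeholders. One way to keep real credentials out of source files is to build the same settings from environment variables. The sketch below uses only the Java standard library and the configuration keys shown above; the class `S3RuntimeConfigSketch`, its method names, and the assumption that credentials are exported as `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` are illustrative and not part of Data Caterer.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Builds the S3 runtime settings shown above without hard-coding credentials. */
final class S3RuntimeConfigSketch {
    /** Returns the S3 settings for the given bucket and credentials. */
    static Map<String, String> s3RuntimeConfig(String bucket, String accessKey, String secretKey) {
        Map<String, String> config = new LinkedHashMap<>();
        config.put("spark.hadoop.fs.s3a.directory.marker.retention", "keep");
        config.put("spark.hadoop.fs.s3a.bucket.all.committer.magic.enabled", "true");
        config.put("spark.hadoop.fs.defaultFS", "s3a://" + bucket);
        config.put("spark.hadoop.fs.s3a.aws.credentials.provider",
            "org.apache.hadoop.fs.s3a.SimpleAWSCredentialsProvider");
        config.put("spark.hadoop.fs.s3a.access.key", accessKey);
        config.put("spark.hadoop.fs.s3a.secret.key", secretKey);
        return config;
    }

    /** Reads an environment variable, falling back to a default when it is unset or empty. */
    static String envOrDefault(String name, String fallback) {
        String value = System.getenv(name);
        return (value == null || value.isEmpty()) ? fallback : value;
    }

    public static void main(String[] args) {
        // Assumed variable names; the fallbacks are the placeholders used in the examples above.
        String accessKey = envOrDefault("AWS_ACCESS_KEY_ID", "access_key");
        String secretKey = envOrDefault("AWS_SECRET_ACCESS_KEY", "secret_key");
        Map<String, String> config = s3RuntimeConfig("my-bucket", accessKey, secretKey);
        // Print only the keys so that credentials never end up in logs.
        config.keySet().forEach(System.out::println);
    }
}
```

The resulting map holds the same key and value pairs that the Java example passes to `runtimeConfig` via `Map.of(...)`, so it could be supplied there instead, assuming `runtimeConfig` accepts any `Map<String, String>`.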

Storing plan/task(s)

You can generate and store the plan/task files in AWS S3, Azure Blob Storage, or Google Cloud Storage (GCS).

This is controlled via configuration, for example in the application.conf file, where you can set something like the following:

```java
configuration()
  .generatedReportsFolderPath("s3a://my-bucket/data-caterer/generated")
  .planFilePath("s3a://my-bucket/data-caterer/generated/plan/customer-create-plan.yaml")
  .taskFolderPath("s3a://my-bucket/data-caterer/generated/task")
  .runtimeConfig(Map.of(
    "spark.hadoop.fs.s3a.directory.marker.retention", "keep",
    "spark.hadoop.fs.s3a.bucket.all.committer.magic.enabled", "true",
    "spark.hadoop.fs.defaultFS", "s3a://my-bucket",
    // can change to other credential providers as shown here
    // https://hadoop.apache.org/docs/stable/hadoop-aws/tools/hadoop-aws/index.html#Changing_Authentication_Providers
    "spark.hadoop.fs.s3a.aws.credentials.provider", "org.apache.hadoop.fs.s3a.SimpleAWSCredentialsProvider",
    "spark.hadoop.fs.s3a.access.key", "access_key",
    "spark.hadoop.fs.s3a.secret.key", "secret_key"
  ));
```

```scala
configuration
  .generatedReportsFolderPath("s3a://my-bucket/data-caterer/generated")
  .planFilePath("s3a://my-bucket/data-caterer/generated/plan/customer-create-plan.yaml")
  .taskFolderPath("s3a://my-bucket/data-caterer/generated/task")
  .runtimeConfig(Map(
    "spark.hadoop.fs.s3a.directory.marker.retention" -> "keep",
    "spark.hadoop.fs.s3a.bucket.all.committer.magic.enabled" -> "true",
    "spark.hadoop.fs.defaultFS" -> "s3a://my-bucket",
    // can change to other credential providers as shown here
    // https://hadoop.apache.org/docs/stable/hadoop-aws/tools/hadoop-aws/index.html#Changing_Authentication_Providers
    "spark.hadoop.fs.s3a.aws.credentials.provider" -> "org.apache.hadoop.fs.s3a.SimpleAWSCredentialsProvider",
    "spark.hadoop.fs.s3a.access.key" -> "access_key",
    "spark.hadoop.fs.s3a.secret.key" -> "secret_key"
  ))
```

```hocon
folders {
  generatedPlanAndTaskFolderPath = "s3a://my-bucket/data-caterer/generated"
  planFilePath = "s3a://my-bucket/data-caterer/generated/plan/customer-create-plan.yaml"
  taskFolderPath = "s3a://my-bucket/data-caterer/generated/task"
}

runtime {
  config {
    ...
    #S3
    "spark.hadoop.fs.s3a.directory.marker.retention" = "keep"
    "spark.hadoop.fs.s3a.bucket.all.committer.magic.enabled" = "true"
    "spark.hadoop.fs.defaultFS" = "s3a://my-bucket"
    #can change to other credential providers as shown here
    #https://hadoop.apache.org/docs/stable/hadoop-aws/tools/hadoop-aws/index.html#Changing_Authentication_Providers
    "spark.hadoop.fs.s3a.aws.credentials.provider" = "org.apache.hadoop.fs.s3a.SimpleAWSCredentialsProvider"
    "spark.hadoop.fs.s3a.access.key" = "access_key"
    "spark.hadoop.fs.s3a.secret.key" = "secret_key"
  }
}
```